Samsung’s 100x zooming smartphone camera requires a fancy lens and impossibly steady hands

Samsung’s new Galaxy S20 Ultra promises massive reach, but good luck holding it steady.

Yesterday, Samsung threw a big party on the West Coast to debut its new smartphones. The flashy Galaxy Z Flip with its flexible glass screen stole most of the spotlight, but the company also announced new flagship phones. The Galaxy S20 comes in three versions, all of which have fancy new 120 Hz refresh rate screens and even 5G connectivity. Of those, the S20 Ultra boasts the burliest camera in the bunch. The wide-angle lens funnels light into a 108-megapixel sensor, while the built-in telephoto lens promises the equivalent of a 100x zoom. That’s an impressive number, but it comes with a few caveats.

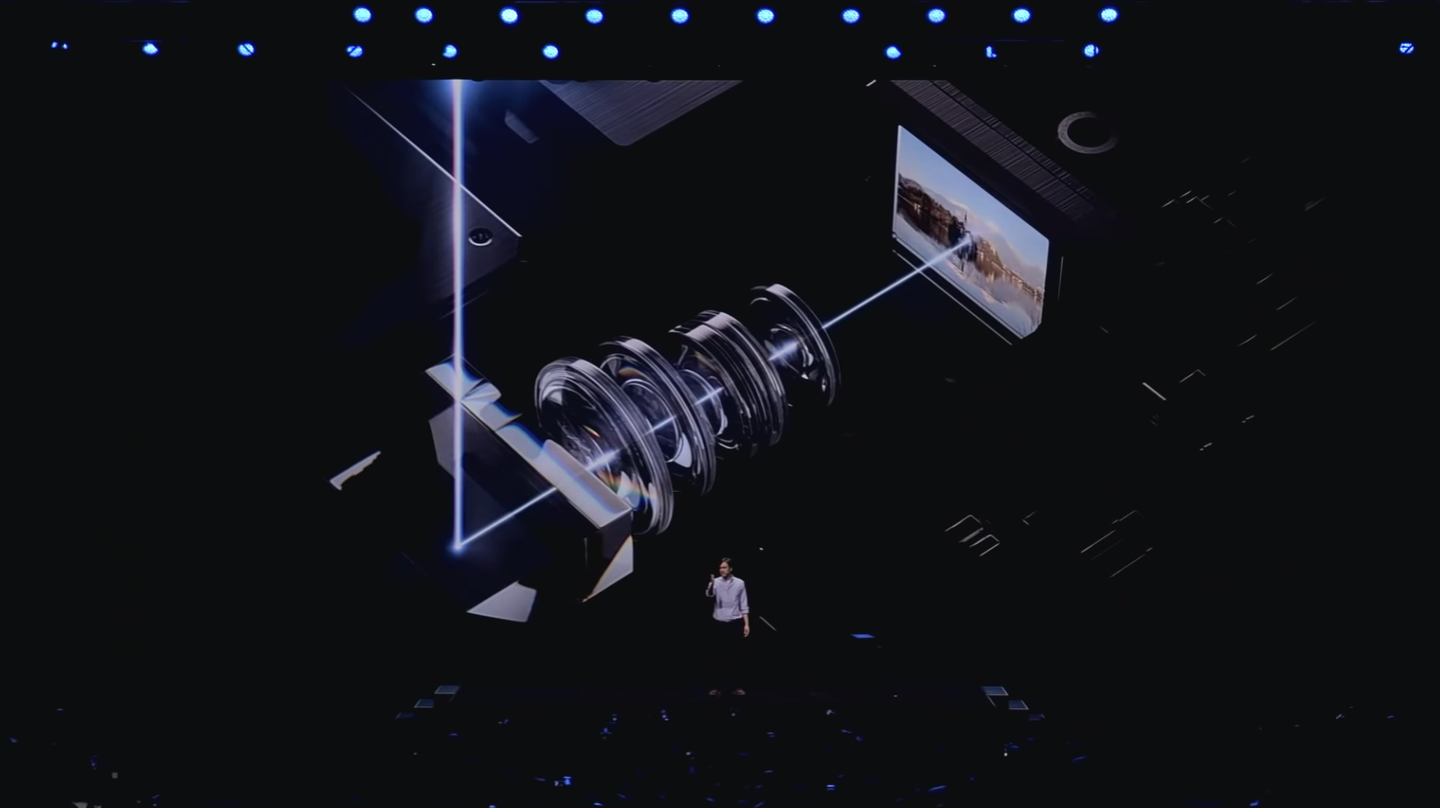

The term “zoom” has taken on two different meanings in the digital camera era. The traditional version of zooming involves physically moving glass elements within the lens itself to change its focal length, and with it how much of the scene lands on the sensor. This is an uncommon feature in smartphones because it requires physical space, but it has shown up in some models like the Huawei P30 Pro and the Oppo Reno. Like those phones, the S20 Ultra uses a clever periscopic system that mounts the lens vertically inside the phone’s body, then uses a prism to reflect incoming light at a right angle and give the sensor a view of the outside world.

There are 100x optical zoom lenses out in the world—some even go beyond that. But, physics requires those lenses to occupy enormous amounts of space. Consider a Canon Digisuper 100 lens used for high-end TV broadcasts like you see during sporting events. It measures two feet long, 10 inches tall, and weighs roughly 52 pounds. It also costs close to $200,000 because of the amount of electronics and glass inside to achieve the desired effect. That’s not very practical for a phone.

The zoom lens inside the Galaxy S20 Ultra only actually optically zooms 4x using the lens itself. From there, it ventures into the complicated world of “digital zoom.”

Zooming beyond the range of a camera’s lens used to be relatively simple. Companies simply cropped in on the image like you’d do in Photoshop, or just by pinching and zooming with your fingers on an image in your camera roll. The resolution of the final image dropped, the image quality suffered, and you typically ended with a worse image than if you had just cropped it in post.
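To put rough numbers on that resolution drop, here is a minimal Python sketch of a plain center crop. The function name `cropped_megapixels` and the 12-megapixel starting point are illustrative assumptions, not figures from any particular phone.

```python
def cropped_megapixels(sensor_megapixels: float, digital_zoom: float) -> float:
    """Megapixels left after a plain center crop at the given digital zoom factor."""
    if digital_zoom < 1:
        raise ValueError("digital zoom factor must be at least 1")
    # Cropping by a factor z keeps 1/z of the width and 1/z of the height,
    # so only 1/z**2 of the original pixels survive.
    return sensor_megapixels / digital_zoom ** 2


# Hypothetical 12-megapixel image cropped at a few zoom factors.
for zoom in (1, 2, 4, 10):
    remaining = cropped_megapixels(12, zoom)
    print(f"{zoom}x crop of a 12 MP image: {remaining:.2f} MP")
```

The pixel count falls with the square of the zoom factor, so a 2x crop already throws away three quarters of the image.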

Eventually, digital zoom began to improve. Cropping in on the pixels made them more apparent and left photos looking noisy and grainy. A technique called pixel binning, however, allows manufacturers to group clusters of pixels together and have them act as a single unit, capturing more light and producing a final product with lower resolution, but higher overall fidelity.
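The idea behind pixel binning can be sketched in a few lines of Python. This is a toy version that assumes a small grayscale grid of brightness values; real sensors bin on the chip and have to account for color filters, and `bin_pixels` is a hypothetical helper rather than anything a phone maker ships.

```python
def bin_pixels(image, factor=2):
    """Sum each factor x factor block of pixel values into one output pixel."""
    height, width = len(image), len(image[0])
    if height % factor or width % factor:
        raise ValueError("image dimensions must be multiples of the bin factor")
    binned = []
    for top in range(0, height, factor):
        row = []
        for left in range(0, width, factor):
            # Pool the light collected by every pixel in this block.
            block_sum = sum(
                image[y][x]
                for y in range(top, top + factor)
                for x in range(left, left + factor)
            )
            row.append(block_sum)
        binned.append(row)
    return binned


# A made-up 4 x 4 grid of brightness readings.
raw = [
    [1, 2, 3, 4],
    [5, 6, 7, 8],
    [9, 10, 11, 12],
    [13, 14, 15, 16],
]
print(bin_pixels(raw))  # [[14, 22], [46, 54]]
```

The 4 x 4 grid becomes a 2 x 2 grid: a quarter of the resolution, with each output pixel carrying the combined signal of four neighbors.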

Artificial intelligence also now plays a pivotal role in digital zoom. Cameras capture several images every time you press the button to snap a photo and they can use some of that extra data to help overcome the inherent downfalls involved with pushing a camera sensor past its natural abilities.

In the S20 Ultra’s case, the camera module has a ton of extra data to work with. The sensor behind the zoom lens checks in at 48 megapixels and the main camera has that beefy 108-megapixel chip tucked inside. In order to help optimize performance when zooming, the phone pulls info from both those cameras to try and capture as much detail as possible.

Still, that 100x number is pushing the limits. Even during Samsung’s on-stage demo, it was clear that there’s a considerable loss of sharpness and detail when you try to stretch out that far. Yes, it gives you a point of view that’s impossible with other smartphone cameras, and with the vast majority of dedicated cameras, but taking advantage of all that range will be tricky for users.

When you zoom in on a subject, you’re narrowing your field of view. As you move toward the telephoto end of things, it becomes increasingly difficult to capture an image without camera shake blurring the picture. Internal vibration reduction systems use both physical movements within the lens and digital compensation to prevent motion blur, but they can only do so much.
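A back-of-the-envelope Python sketch shows why shake gets so much worse as the view narrows. It rests on loud assumptions: a 1x view equivalent to a 26 mm lens on a 36 mm-wide full-frame sensor, and a hand wobble of a tenth of a degree. Neither number comes from Samsung, and both function names are hypothetical.

```python
import math

FRAME_WIDTH_MM = 36.0   # full-frame reference width used for "equivalent" focal lengths
BASE_FOCAL_MM = 26.0    # assumed 1x equivalent focal length (illustrative, not a spec)


def horizontal_fov_degrees(zoom: float) -> float:
    """Horizontal angle of view at the given zoom factor."""
    focal = BASE_FOCAL_MM * zoom
    return math.degrees(2 * math.atan(FRAME_WIDTH_MM / (2 * focal)))


def shake_shift_fraction(zoom: float, wobble_degrees: float) -> float:
    """Fraction of the frame width the image slides for a given angular wobble."""
    focal = BASE_FOCAL_MM * zoom
    return focal * math.tan(math.radians(wobble_degrees)) / FRAME_WIDTH_MM


for zoom in (1, 4, 10, 100):
    fov = horizontal_fov_degrees(zoom)
    shift = shake_shift_fraction(zoom, wobble_degrees=0.1)
    print(f"{zoom:>3}x: {fov:6.2f} degrees wide, 0.1 degree wobble moves the frame {shift:.1%}")
```

Under those assumptions, the view shrinks from roughly 69 degrees at 1x to under one degree at 100x, and the same tiny wobble that is invisible at 1x slides the picture across more than a tenth of the frame.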

With the 100x lens’s field of view approximating what you’d get from a telescope, hand-holding that long of a lens is nearly impossible. Even on a tripod, the simple vibrations from pressing the button to take the picture would likely be enough to shake the camera and cause some blur. If Samsung introduces this 100x zoom feature on the next version of the Galaxy Note, the remote camera activation capabilities baked into the S Pen stylus will come in handy for taking a picture without having to actually tap the screen.

Zoom lenses almost always have smaller apertures than non-zooming (or prime) lenses, which means they require longer exposures and introduce even more potential for blur. In the S20 Ultra’s case, the telephoto camera has a maximum aperture of f/3.5, which loosely means that it’s only letting in about a quarter of the light that the f/1.8 main camera lets through. That makes the sensor and the AI-powered multi-shot modes work a lot harder.
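The “about a quarter” figure follows from the f-numbers alone, since the light a lens admits scales with the inverse square of its f-number. Here is a short Python check; the helper name `light_ratio` is made up for illustration, and the math ignores real-world differences in sensor size and lens transmission.

```python
import math


def light_ratio(f_number_a: float, f_number_b: float) -> float:
    """How many times more light lens A admits than lens B at the same shutter speed."""
    # Light gathered scales with aperture area, which is proportional to 1 / N**2.
    return (f_number_b / f_number_a) ** 2


ratio = light_ratio(1.8, 3.5)   # main camera versus telephoto camera
stops = math.log2(ratio)        # each stop is a doubling of light
print(f"f/1.8 gathers about {ratio:.1f}x the light of f/3.5 ({stops:.1f} stops)")
```

The ratio works out to roughly 3.8, or just under two stops, so the telephoto needs an exposure nearly four times as long to collect the same amount of light.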

So, while you probably won’t want to use the 100x zoom very often, this is a big jump in terms of mainstream smartphone camera technology. Zoom has typically been a shortcoming for smartphones, but if these periscopic lenses catch on, we can reasonably expect the technology to improve rather quickly. Until then, maybe pick up a tripod with a smartphone adapter before you go out zooming.