Shrink-ray engaged: Researchers demo a camera no larger than a grain of salt

This tiny metasurface camera promises new possibilities for medical imaging and robotics, and an end to unsightly smartphone camera bumps.

With the rise of smartphones and mirrorless cameras, photography gear has gotten more compact over the past decade. But for some uses, like medical imaging and miniature robotics, current camera tech still proves far too bulky. Now, researchers have pushed the boundaries of what’s possible with an experimental camera that’s similar in size to a grain of salt and yet offers image quality that’s an order of magnitude ahead of prior efforts on a similar scale.

What is a metasurface camera?

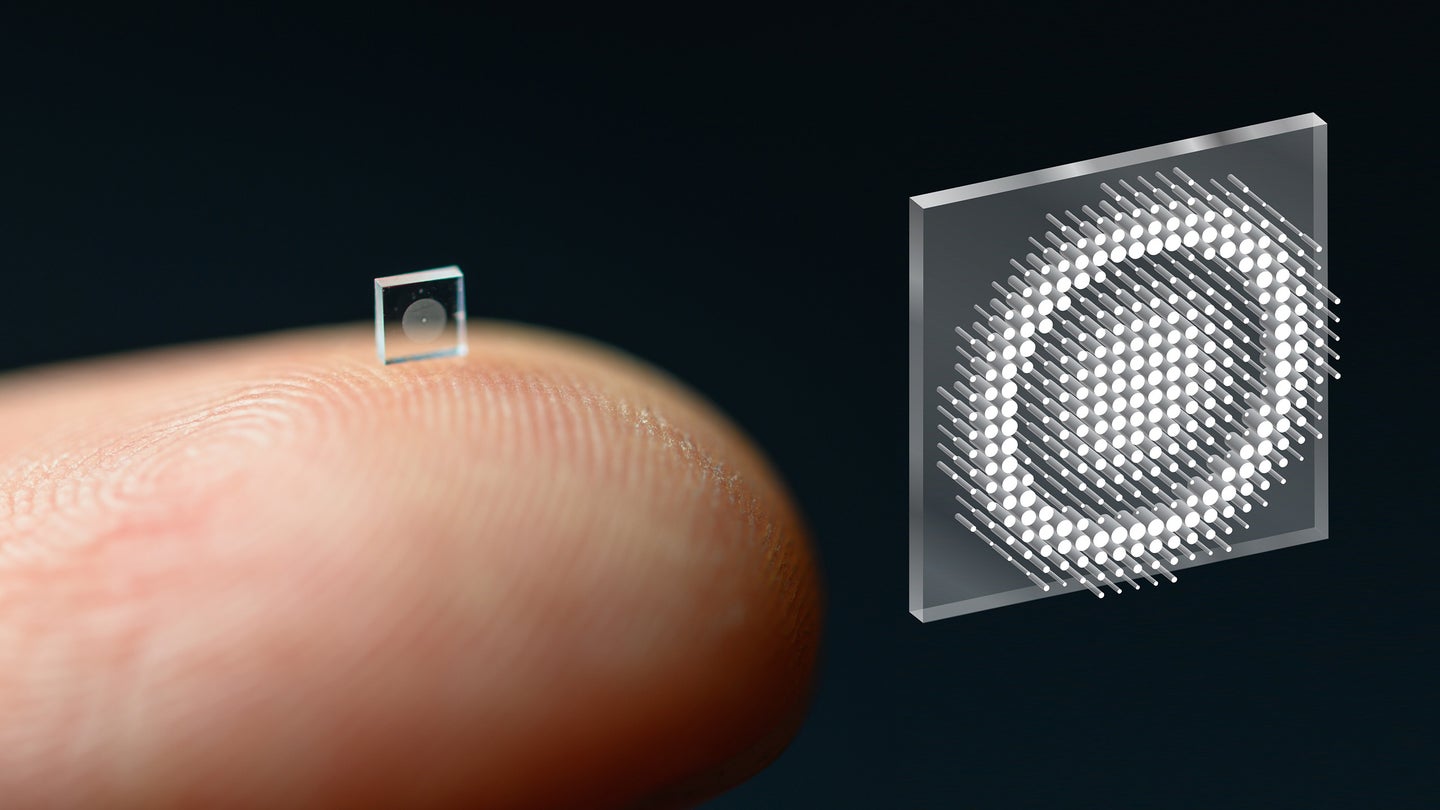

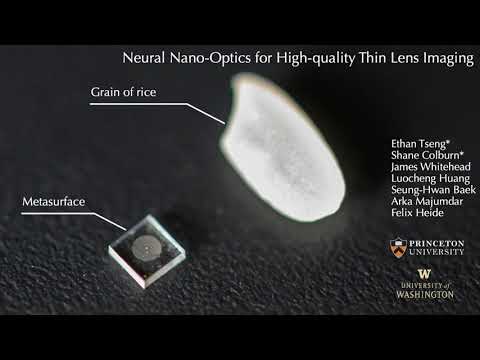

Designed by a team of researchers from Princeton University and the University of Washington, the new system is detailed in a paper published last week in the peer-reviewed journal Nature Communications. In place of the complex, bulky compound lens found in most cameras, it uses a metasurface just 0.5mm wide, studded with 1.6 million cylindrical "nanoposts" that shape the light passing through it.

The metasurface camera is said to provide image quality on par with a conventional camera and lens 500,000 times larger in volume. After comparing the sample images in the paper and the YouTube video above, we'd say that claim is perhaps a little too generous, but that takes nothing away from the team's achievements, which are certainly impressive.

Compared to other cameras

The combination of a 2/3-inch sensor and an Edmund Optics 50mm f/2.0 lens, used for the conventional-camera comparisons, still delivers noticeably better image quality, especially in the corners. At the same time, the metasurface camera's results are remarkable given its enormous size advantage. They are also far ahead of what the previous state-of-the-art metasurface camera achieved just a few short years ago (see below).

Compared to earlier metasurface cameras, the new version differs both in the design of its individual nanoposts and in its subsequent image processing. The nanoposts' structure was optimized using machine-learning algorithms that prioritized image quality and field of view. The image processing algorithms, meanwhile, adopted neural feature-based deconvolution techniques. Finally, the results of the new image processing were fed back into the design to allow further improvements to the nanopost structure.
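The team's own method, neural feature-based deconvolution, isn't reproduced here. As a rough illustration of the underlying idea, that a known optical blur can be partly undone in software, here is a minimal sketch using a classical Wiener filter instead. It assumes a one-dimensional "image", a known point spread function (PSF) and a fixed noise-to-signal constant; the function names and PSF values are hypothetical.

```python
import cmath

def dft(x):
    """Naive discrete Fourier transform (O(n^2)); fine for tiny signals."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]

def idft(X):
    """Inverse of dft(); keeps only real parts since our signals are real."""
    n = len(X)
    return [(sum(X[k] * cmath.exp(2j * cmath.pi * k * t / n) for k in range(n)) / n).real
            for t in range(n)]

def blur(signal, psf):
    """Circular convolution: each point in `signal` is smeared according to `psf`
    (a stand-in for what imperfect optics do to a scene)."""
    n = len(signal)
    return [sum(signal[(t - s) % n] * psf[s] for s in range(len(psf))) for t in range(n)]

def wiener_deconvolve(blurred, psf, noise_to_signal=1e-3):
    """Classical Wiener filter in the frequency domain: X = conj(H) * Y / (|H|^2 + K).
    This is NOT the paper's neural method, just a textbook deconvolution."""
    n = len(blurred)
    padded_psf = psf + [0.0] * (n - len(psf))  # zero-pad PSF to the signal length
    H = dft(padded_psf)
    Y = dft(blurred)
    X = [h.conjugate() * y / (abs(h) ** 2 + noise_to_signal) for h, y in zip(H, Y)]
    return idft(X)

if __name__ == "__main__":
    scene = [0.0] * 16
    scene[4] = 1.0                      # a single bright point
    psf = [0.6, 0.3, 0.1]               # hypothetical blur kernel
    blurred = blur(scene, psf)
    restored = wiener_deconvolve(blurred, psf, noise_to_signal=1e-4)
    print([round(v, 2) for v in restored])
```

The `noise_to_signal` term keeps the filter from amplifying noise at frequencies the blur has nearly wiped out; smaller values recover more detail but are less forgiving of noise.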

These strategies have clearly worked well, yielding a huge step forward from the results possible with past efforts. While the compound optic still has a pretty obvious advantage in terms of fine detail, color, contrast, vignetting and corner sharpness, the gap between technologies is certainly shrinking.

What’s next?

Next up, the research team plans to increase the metasurface camera's computational abilities. This should allow not only another step forward in image quality but also new capabilities such as object detection.

In the longer term, the study’s senior author, Felix Heide, suggests that the goal is to break into the smartphone market. Heide predicts that one day, you could see the multiple cameras in your smartphone replaced by a single metasurface that turns its entire rear panel into a camera. Is the era of awkward camera bumps soon to meet its end? We can only hope!