Carnegie Mellon Computer is Learning by Scanning Millions of Images

Meet the Never Ending Image Learner (NEIL)

Trying to teach a computer what we might identify as common sense is a tricky proposition. How do you teach a machine that even though a Chihuahua and a Great Dane look very different, they’re both dogs? But that a cat is something else entirely? How do you give it the conceptual framework for not only identifying images, but also their context? That’s what Carnegie Mellon’s Never Ending Image Learner (NEIL) computer program is doing.

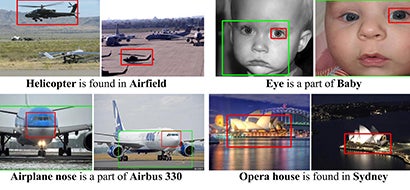

The NEIL has been running since July, and has trawled three million images off the web, and is using them to establish a visual database. It does a lot more than just identify what’s in a picture, and is also able to establish relationships. So it can learn that one thing looks similar to another, or that zebras are often found in the savannah, are that wheels are parts of cars.

This database can be found on NEIL’s website and is a mix of the quite insightful (“Bamboo_forest can be / can have Vertical_lines.”) to the slightly bizarre (“Bullet_train can be a part of Air_hockey.”).

In a press release, Abhinav Gupta said:

NEIL relies on humans to help identify when things are wrong with its algorithms, which you can help with on the website.

So far, NEIL has analyzed three million images, indentifying 1,500 types of objects, and 1,200 types of scenes. It’s runs 24 hours a day crunching these images, powered by two computer clusters that include 200 processing cores.