Death of the Photo Editor? Eyetracking Software Learns What Photos Are Worth Publishing


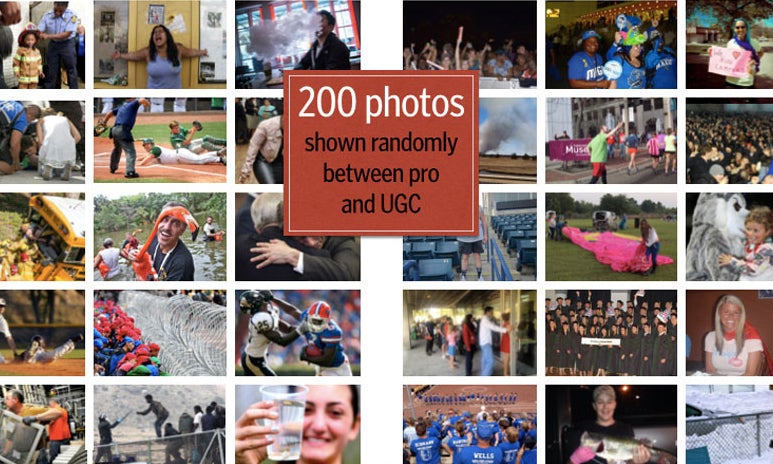

The National Press Photographers Association just released a study that used eyetracking software and surveys to determine what audiences value and respond to in journalistic imagery. Researchers at the University of Minnesota showed 52 test subjects 200 news images, half made by professionals and the rest by amateurs, that is, user-generated content (UGC). Infrared cameras recorded nearly 20,000 eye movements to determine which images, and what within each image, were most engaging, and how test subjects interacted with captions. The researchers followed up with surveys asking subjects to rate images on quality and shareability. Similar studies have been used in the past to determine how users interact with tablet content.

The initial findings seem to reinforce fairly conventional newsroom wisdom. Quality matters—audiences can almost always tell when an image was made by a professional, and are far more likely to appreciate it. People like looking at faces, particularly if they are emotive. And people appreciate thoughtful captions, as well as “special access” to a scene that is difficult to cover.

Though this sample size is very small, it is foreseeable that with a large enough data set, perhaps gathered through some consumer interface (e.g., Facebook), someone could use this information to develop an imaging application that sifts through, and even sequences, the “best” news images. Imagine an app that, in the aftermath of a breaking news event, determines which of the available images leads. Digital newsrooms have come a long way from the era of print, when reader response came a day late via snail mail; now they can react instantly to instant feedback, that is, clicks and comments. Knowing in advance, through mass data, what will work and what won’t could take this a majorly disruptive step further. Would publishers and shareholders continue to bank on human instinct? What kind of echo chamber might we find ourselves in otherwise?

The study was published as the first of a four-part series. We’ll be eagerly awaiting the rest in the coming weeks, hoping to see the 200 images shown to the test subjects.