What Photography Will Look Like By 2060

Imaging tomorrow will look nothing like it does today.

It is Sunday, July 18, 2060:

Old-Timers’ Day, Yankee Stadium.

You’re here with your old photoglove, getting some action shots from your seat in the upper deck without even putting down the $40 beer in your left hand. What would look to us like normal sunglasses are in fact camera-equipped goggles with a heads-up display on the inside of the right lens.

Pointing the index finger of the photoglove, which is impregnated with tiny pyramid-shaped crystal microlenses surrounding nano-sized image sensors, you draw a frame around each bit of action so that a window appears to float in front of your goggles.

Blink: You bat your right eye to capture pictures of 86-year-old Derek Jeter throwing out the first pitch from deep center field, demonstrating his newly regenerated shoulder muscle.

Blink: A suicide squeeze.

Blink: A diving catch.

Applause is muted because the whole stadium is full of people pointing and winking at the field.

Back home, you begin to edit your pictures using a surround-vision display. The merging of the computer and the camera has brought computational photography to fruition, so it doesn’t much matter that batters were distant and sometimes had their backs to you. Using your glove, you captured a complete three-dimensional image of the players via thousands of tiny, wireless, GPS-enabled microcams that have been spread like glitter all over the field.

Choose an image from a 2D desktop display and it appears before you in 3D, life-size, projected into an invisible fog from at least six laser projectors mounted in the walls. A joystick with a trigger and thumb buttons lets you change focal plane and depth of field, zoom in for close-ups, even alter the angle of view. Each picture is actually a few seconds of 3D video, because the recording of each scene began five seconds before you blinked and continued for five seconds after. At 1,000 frames per second, each ten-second “capture” gives you the choice of separately viewing (and walking completely around) 10,000 stills or replaying the action as if you were positioned on the field…

Based on current research directions, this is one possible photo future.

Where photography goes from here depends not just on what dedicated shooters want, but maybe more so on the whims of a billion app-happy cellphone junkies, each of whom gets a much better camera with every upgrade. The increasing miniaturization and simultaneous expansion of camera capabilities are now pushing the outer edges of the photo frontier.

Where will it go? A number of possible tracks are discernible, but the widest vector leads toward increasing the camera’s light-gathering function by several orders of magnitude.

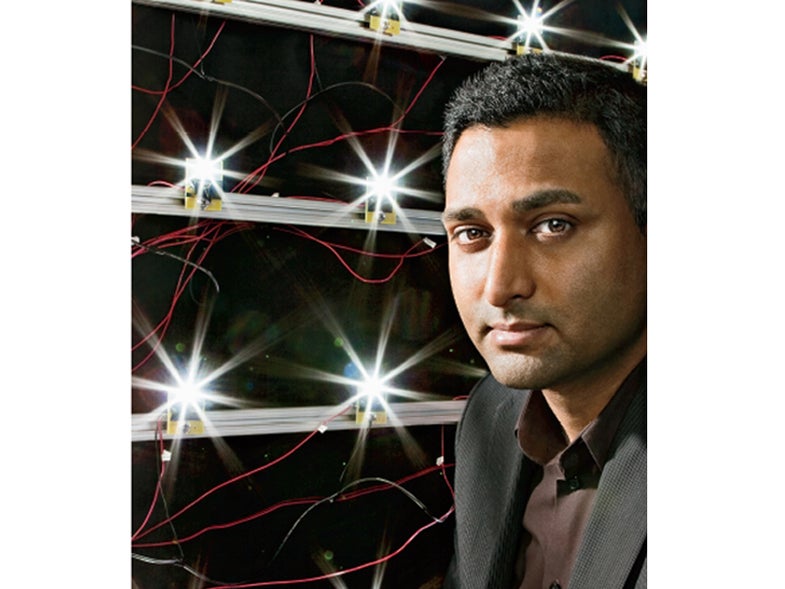

“It’s all about getting more information from the image sensor,” explains Abbas El Gamal, an electrical engineering professor at Stanford University, whose research group has been developing ways to make CMOS sensors both smaller and more sensitive, capable of providing depth maps for super-3D image capture.

Though photographs in the near future will still be composed by people holding cameras, it will gradually become more accurate to say pictures were computed rather than “taken” or “captured.”

But this requires broadening the dynamic range of light and motion detectable by the sensor chip. “Without information,” says El Gamal, “there is no hope.”

Within this context, clues from current research in computational photography, combined with the predictions of electrical engineers and technology visionaries, can be projected along a future timeline of what we can reach for, and maybe even grasp:

2015 Into The Next Dimension

There are 300 million cellphones in America, all of them containing increasingly sophisticated cameras. Pixels have gotten so small that 50MP point-and-shoots are common, and there are pro cameras in the gigapixel range.

High-def video is quickly evolving into 3D, jump-started in 2004 by the establishment of the Network of Excellence in 3DTV, a consortium of manufacturers and researchers including Holografika, Mitsubishi, Philips, Texas Instruments, and some two dozen others.

“You know how when you think of the past, you see it in black-and-white images?” says Nils Lassiter, an application engineer with Photron, a maker of ultra-high-speed video cameras. “In the future, thinking of the past will bring up 2D images, as if people were flat back then.”

European Union-funded R&D on “autostereo” 3DTV (which doesn’t require colored glasses to combine the two images needed for traditional stereoscopy) has focused on head-tracking, an extension of face-detection technology. Laser-based holographic projection, which sends separate images from the display to each eye of the viewer (or of multiple viewers), is just entering the market.

Still photos are following that lead toward autostereo 3D representation based on head-tracking that’s built into the picture frame. Photographic prints on paper are competing with progressively thinner wireless 3D interactive display screens.

2020 Rise Of The Organic Camera

The trend toward using one iPhone-like device for multiple wireless tasks has almost peaked as the use of standalone cameras declines, even though compacts are now the size of pocket change. (Pro cameras are about the size they were in 2009, but the multiplicity of lenses has been replaced by a single omni-lens.)

“At first, there will be one device for everything,” explains Ramesh Raskar, associate professor at the MIT Media Lab and co-director of its Center for Future Storytelling. “Later it will fragment all over the body, the camera to the eye, the phone and audio to the ear. Some people will have them implanted, some not.”

Lenses have shrunk and moved closer to merging completely with image sensors, which are now mostly based on organic (carbon-based) compounds and nano-sized organic photodiodes. The sensor’s flexibility allows it to be formed into spherical shapes that more closely resemble, and function like, the human retina.

“The spherical sensor gives you a very wide field of view, with everything in focus, similar to what the human eye sees,” says Daniel Palanker, associate professor of ophthalmology at Stanford University. “Lenses will always be important because you cannot capture a picture without good focus. Today, we require expensive and bulky lens elements to correct for the curvature of the focal plane. But if you instead use a spherical sensor, you can capture sharp images with much less expensive optics.”

Breakthroughs in the power and miniaturization of solar cells have produced microbatteries that last for years without recharging. Deuterium, derived from seawater, has become the go-to isotope for power, but bio-batteries (which amplify the electrical charge emitted by bioengineered bacteria) and air batteries (based on the interaction of a lithium, zinc, or aluminum compound with oxygen molecules in the atmosphere) are on the horizon.

2030 In The Eye Of The Beholder

The fragmentation of wireless capabilities begins to take the camera and the phone out of people’s hands, attaching or implanting the rice-grain-sized machines to their eyes and earlobes.

Competing for market share are light, wraparound sunglasses with 4D light-capture capabilities. This means a thin layer of image sensors can now record not just the color and intensity of the visible spectrum, but also the direction and angle of reflected light in the wider spectrum, including ultraviolet and infrared.

You frame a photograph by holding your extended forefingers and thumbs in front of you, like an artist composing the outlines of a painting. Tripping the “shutter” (now really just a “save” function) can be done with a verbal command, such as “Click.”

Algorithms are computing a visual version of surround sound, allowing images to be viewed from perspectives other than where the camera was actually located. Photos are now almost identical to what the eye sees, but they are also “relightable,” so photographers no longer have to make compromises to achieve high dynamic range.

Depth of field is controllable, letting viewers, rather than photographers, see their own versions of the photo. “Photos can be displayed in a way that reflects your mood,” says Ramesh Raskar of the MIT Media Lab.

Motion blur is both correctable and replayable as the “film-rooted distinction between ‘still’ cameras and ‘video’ cameras” disappears, as predicted in the STAR (State of the Art) report on computational photography published by Eurographics in 2006.

Photography and holography have now merged, requiring something more than flat-screen displays. FogScreen, an early-21st-century company whose patented “immaterial” display is a free-floating screen of dry fog you can walk through, has seen its technology evolve into 6D panoramic displays filling whole rooms in which the viewer can walk around freely among projected images. These “immersive” displays have further blurred the line between real and virtual existence.

2040-2050 Images With A Wave Of A Wand

The camera as a device you carry has completely disappeared. Image sensors have become part of the literal fabric of everyday life, woven into gloves and photo cloth, which produce 3D images after you rub them over an object such as a human face.

We now have “camera wallpaper” and live Google Earth, which records continuously and allows the viewer to remotely select and experience virtually any public scene in the world. Displays have become tiny laser projectors beaming images directly onto your retina.

Matt Hirsch, a graduate student in the field of information ecology at MIT, foresees “painting” a photograph by waving “a tiny wand containing an infrared laser and terabytes of data storage.”

“Cameras in the environment will work collectively to image the scene from your perspective, and from other perspectives of interest, and then transfer the resulting data to your wand. You could then dial back from this point to see the scene at other times in the past.”

Image sensors encased in microlenses and connected to GPS receivers are now sprinkled like confetti almost everywhere. The concept of privacy is in flux and widely disputed. Researchers are getting close to being able to tap directly into the neural pathways of vision in your eyes and brain, accessed by an external probe held gently against the temple, like those used today for ultrasound imaging. Everything you see is now downloadable.

The concept of the camera as an actual object is so 2009.